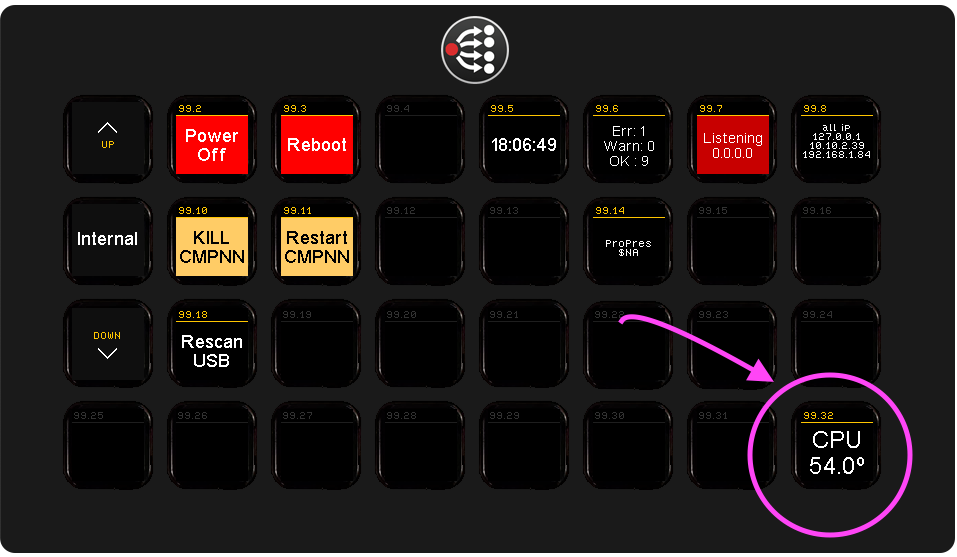

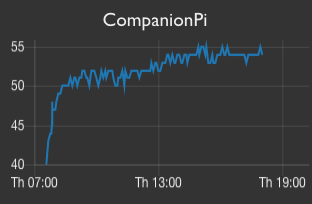

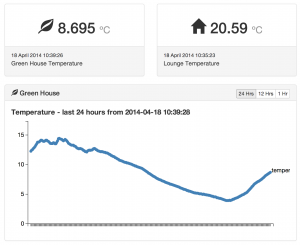

The realtime sensor display live!

In a previous post I talked about how I put together some temperature sensors to log the temperature in the greenhouse and lounge. The sensors use XRF radio modules to send their data back to a Raspberry Pi (also sporting an XRF module) and run from a 3.3v button cell battery.

The XRF module on the Raspberry Pi passes the sensors’ messages on to the RPi’s serial port, and this is where we start to talk about code…

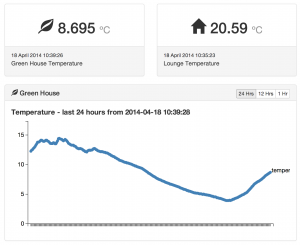

The plan was to build a realtime display of the data from the temperature sensors. You can now see the live temperature from our greenhouse and lounge, along with a history of readings over the last 1, 12 and 24 hours.

The code I used is available in a public GitHub repo, but it should be considered ‘alpha’ code: it has not been tested end to end, from build through to deployment. So use it at your own risk, and assume you’ll have to tinker with it to get things running for you.

The steps below give an overview of the architecture, and each of these steps is explained in more detail in the sections which follow. However, this is not intended to be an in-depth how-to guide.

- Sensor sends LLAP message to RPi serial port

- Python script listens to the serial port every minute

- LLAP messages from the sensors are parsed into a simple JSON document, which is posted to a Firebase database

- Firebase is an event-driven database: every addition or update fires an event.

- An AngularJS app (served from Amazon S3) shows the latest readings in a live-updating display by listening for those Firebase events.

Sensor sends LLAP messages to RPi serial port

This is the easy bit, as the hard work of programming the sensors has already been done for you by the Ciseco people. The sensors send messages using a simple 12-character message format called LLAP (Lightweight Local Automation Protocol). The actual sensor setup and connection to the RPi is covered in the previous article.
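As a rough illustration of the message format, here is a minimal Python sketch that splits one LLAP message into its parts. It assumes the layout as I understand Ciseco’s LLAP: a lowercase `a` start character, a two-character device ID, then a nine-character payload padded with `-`, where the payload begins with an upper-case command such as `TMPA` (temperature) or `BATT` (battery voltage). `parse_llap` and `LLAPMessage` are hypothetical names, not part of my repo or Ciseco’s code, so check the LLAP documentation for your sensor firmware.

```python
import re
from dataclasses import dataclass
from typing import Optional

LLAP_LENGTH = 12  # every LLAP message is exactly 12 characters long


@dataclass
class LLAPMessage:
    device_id: str  # two-character sensor ID, e.g. "AA"
    command: str    # leading upper-case command, e.g. "TMPA" or "BATT"
    value: str      # rest of the payload, e.g. "21.5" (may be empty)


def parse_llap(raw: str) -> Optional[LLAPMessage]:
    """Parse a single 12-character LLAP message; return None if malformed."""
    if len(raw) != LLAP_LENGTH or not raw.startswith("a"):
        return None
    device_id = raw[1:3]
    payload = raw[3:].rstrip("-")  # drop the trailing '-' padding
    match = re.match(r"([A-Z]+)(.*)", payload)
    if match is None:
        return None  # payload was empty or did not start with a command
    return LLAPMessage(device_id, match.group(1), match.group(2))
```

For example, `parse_llap("aAATMPA21.5-")` gives a message from device `AA` with command `TMPA` and value `21.5`.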

Python script listens to serial port

I wrote a small Python module to parse LLAP messages into a simple JSON document, which can then be stored or otherwise manipulated as necessary. The module is available as part of the GitHub repo. It treats every message received as an event: if you have registered a callback for a particular type of LLAP message, that callback is called every time that type of message arrives, and does whatever you have written it to do. The LLAP module itself only parses the string of messages captured from the serial port and passes each message on to your callbacks. This means you can react to each temperature reading or low-battery message as soon as it arrives.
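The callback idea can be sketched like this. `LLAPDispatcher` is a hypothetical class, not the module in the repo: it buffers raw characters from the serial port, cuts out each 12-character message starting at an `a`, and calls every callback registered for that message’s command. Using `a` as the start marker assumes no stray lowercase `a` turns up in any noise between messages.

```python
from collections import defaultdict
from itertools import takewhile
from typing import Callable, Dict, List

# A callback receives (device_id, command, value), e.g. ("AA", "TMPA", "21.5")
Callback = Callable[[str, str, str], None]

MESSAGE_LENGTH = 12


class LLAPDispatcher:
    """Hypothetical dispatcher: turns a raw character stream into LLAP
    messages and notifies the callbacks registered for each command."""

    def __init__(self) -> None:
        self._callbacks: Dict[str, List[Callback]] = defaultdict(list)
        self._buffer = ""

    def register(self, command: str, callback: Callback) -> None:
        """Call `callback` every time a message with `command` arrives."""
        self._callbacks[command].append(callback)

    def feed(self, data: str) -> None:
        """Append newly received characters and dispatch complete messages."""
        self._buffer += data
        while True:
            start = self._buffer.find("a")  # 'a' marks the start of a message
            if start == -1:
                self._buffer = ""  # nothing but noise: discard it
                return
            if len(self._buffer) - start < MESSAGE_LENGTH:
                self._buffer = self._buffer[start:]  # wait for the rest
                return
            message = self._buffer[start:start + MESSAGE_LENGTH]
            self._buffer = self._buffer[start + MESSAGE_LENGTH:]
            self._dispatch(message)

    def _dispatch(self, message: str) -> None:
        device_id = message[1:3]
        payload = message[3:].rstrip("-")  # strip the '-' padding
        command = "".join(takewhile(str.isalpha, payload))
        value = payload[len(command):]
        for callback in self._callbacks.get(command, []):
            callback(device_id, command, value)
```

A message split across two serial reads is held in the buffer until the rest arrives, so whatever chunk the port returns can be passed straight to `feed`. Messages with no registered callback (a `BATT` reading, say) are simply dropped.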

It is up to your callbacks to decide whether to record the time the message was received, and what action to take based on the message. Using this approach, it would be simple to have a temperature crossing some threshold trigger an action. For example, if it gets too warm in the greenhouse, a motorised window opener could be triggered to let in some fresh air.
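Here is a minimal sketch of such a threshold trigger. `make_threshold_callback` and the `on_above`/`on_below` actions are hypothetical; in the greenhouse example, `on_above` might drive the motorised window opener. It only acts when a sensor’s reading crosses the threshold rather than on every warm reading, so the opener isn’t repeatedly told to open a window that is already open. It assumes callbacks are called with `(device_id, command, value)` strings and a numeric `value`.

```python
from typing import Callable, Dict

Action = Callable[[str, float], None]  # receives (device_id, temperature)


def make_threshold_callback(threshold: float, on_above: Action, on_below: Action):
    """Return a callback that runs on_above when a sensor's temperature rises
    above `threshold` and on_below when it falls back to or below it."""
    was_above: Dict[str, bool] = {}  # last known state for each sensor

    def callback(device_id: str, command: str, value: str) -> None:
        temperature = float(value)
        is_above = temperature > threshold
        # Sensors we haven't heard from yet are treated as below the threshold
        previous = was_above.get(device_id, False)
        was_above[device_id] = is_above
        if is_above != previous:  # only act when the threshold is crossed
            (on_above if is_above else on_below)(device_id, temperature)

    return callback
```

Keeping the state per device means one callback can watch both the greenhouse and the lounge sensors without their readings interfering.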

You have to write the code that listens to the serial port and registers the callbacks yourself, but the code I am using to listen to the sensor messages is in the GitHub repo.
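A listening loop could look something like the sketch below. It is not the script from my repo: it assumes the pyserial package, an XRF on the Pi’s GPIO serial port at `/dev/ttyAMA0`, and 9600 baud, so adjust those for your setup. `listen` takes a read function rather than a port object so the loop can be tried without any hardware, and `on_text` is wherever the received characters should go, such as a parser that splits them into messages. `run_on_serial_port` is a hypothetical entry point.

```python
from typing import Callable, Optional


def listen(read: Callable[[int], bytes],
           on_text: Callable[[str], None],
           chunk_size: int = 12,
           max_empty_reads: Optional[int] = None) -> None:
    """Read chunks from a serial port and pass the decoded text to on_text.

    `read` is expected to behave like pyserial's Serial.read: it returns up to
    `size` bytes, or b"" when its timeout expires with nothing received.
    Runs forever unless max_empty_reads consecutive empty reads occur.
    """
    empty_reads = 0
    while max_empty_reads is None or empty_reads < max_empty_reads:
        data = read(chunk_size)
        if not data:
            empty_reads += 1  # timed out with nothing received
            continue
        empty_reads = 0
        on_text(data.decode("ascii", errors="ignore"))


def run_on_serial_port(port_name: str = "/dev/ttyAMA0", baud: int = 9600) -> None:
    """Hypothetical entry point; the port name and baud rate are assumptions."""
    import serial  # pyserial, assumed installed (pip install pyserial)

    with serial.Serial(port_name, baud, timeout=1) as port:
        # Echo raw LLAP text; swap print for your own parser or dispatcher
        listen(port.read, lambda text: print(text, end="", flush=True))
```

The one-second timeout keeps `read` from blocking forever, which leaves room to add periodic work (such as a once-a-minute upload) inside the loop.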

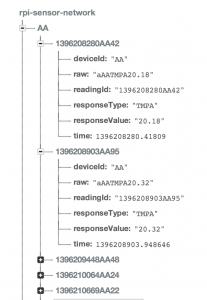

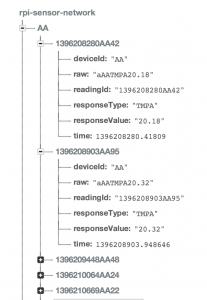

LLAP messages sent as JSON documents to a Firebase database

The readings as seen in the Firebase database.

This is where it starts to get fun!

Firebase is an awesome cloud-based database which allows you to post and get data using simple HTTP requests. Because the database is event driven, and because it already has deep integration with AngularJS, you can quickly build a responsive, data-driven site that instantly reacts to events happening in different browsers all over the web. For our purpose, showing a live-updating latest temperature in a web page, this is ideal.
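To give a flavour of the HTTP side, here is a minimal sketch of reading history back out of Firebase with the Python standard library. It assumes the readings live under a `/readings` path, that each reading’s priority is its Unix timestamp in seconds, that the data is readable without an auth token, and that the database accepts the REST query parameters `orderBy="$priority"` and `startAt`. `example-db` is a placeholder URL, and `history_url`/`fetch_history` are hypothetical helpers. My AngularJS app does not fetch data this way (it goes through angularFire), so treat this as an illustration only.

```python
import json
import time
import urllib.request
from typing import Any, Optional
from urllib.parse import urlencode


def history_url(db_url: str, hours: float, now: Optional[float] = None) -> str:
    """Build a REST URL for readings from the last `hours` hours, assuming
    each reading's priority is its Unix timestamp in seconds."""
    now = time.time() if now is None else now
    cutoff = now - hours * 3600
    # Firebase expects the orderBy value as a JSON string, quotes included
    query = urlencode({"orderBy": '"$priority"', "startAt": cutoff})
    return f"{db_url.rstrip('/')}/readings.json?{query}"


def fetch_history(db_url: str, hours: float) -> Any:
    """GET the readings for one history window and decode the JSON reply."""
    with urllib.request.urlopen(history_url(db_url, hours)) as response:
        return json.load(response)
```

The 1, 12 and 24 hour windows would then be `fetch_history(url, 1)`, `fetch_history(url, 12)` and `fetch_history(url, 24)`. The reply is a JSON object keyed by reading id, so it needs sorting by timestamp before plotting.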

The Python code mentioned in the previous section takes the parsed LLAP message and adds a timestamp, a priority (used for ordering the result sets in Firebase) and a reading id, which is just the timestamp float with the period (.) replaced by the sensor id (you can’t have periods in a Firebase key!). The resulting JSON object is then posted as a reading to the Firebase database.
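The reading document and the HTTP call might be sketched as follows. The field names, the `/readings` path and setting the priority through the REST API’s `.priority` key are assumptions for illustration, not necessarily what the repo does; the reading id follows the scheme just described. I’ve used a PUT to the reading’s own id so the key is predictable, whereas a POST to `/readings.json` would let Firebase generate the key instead. `build_reading`, `make_put_request` and `example-db` are hypothetical.

```python
import json
import time
import urllib.request
from typing import Any, Dict, Optional


def build_reading(device_id: str, command: str, value: str,
                  timestamp: Optional[float] = None) -> Dict[str, Any]:
    """Turn a parsed LLAP message into a JSON-ready reading document."""
    ts = float(time.time() if timestamp is None else timestamp)
    # Firebase keys can't contain '.', so swap the decimal point for the sensor id
    reading_id = str(ts).replace(".", device_id)
    try:
        parsed: Any = float(value)
    except ValueError:
        parsed = value  # non-numeric payloads stay as text
    return {
        "id": reading_id,
        "sensor": device_id,
        "type": command,
        "value": parsed,
        "timestamp": ts,
        ".priority": ts,  # assumed: order readings by time
    }


def make_put_request(db_url: str, reading: Dict[str, Any]) -> urllib.request.Request:
    """Build (but don't send) a PUT that stores the reading under its id."""
    url = f"{db_url.rstrip('/')}/readings/{reading['id']}.json"
    body = json.dumps(reading).encode("utf-8")
    return urllib.request.Request(url, data=body, method="PUT",
                                  headers={"Content-Type": "application/json"})
```

Sending a reading is then `urllib.request.urlopen(make_put_request(db_url, reading))`. Keeping the request construction separate makes it easy to check what would be sent without touching the live database.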

Firebase is event-based and fires events that you can monitor

Every time a new reading is added to the database by the python script, Firebase fires off some events to any other clients which are listening for those events.

This means we can write a web app which listens for those events and updates its display with each new reading, giving us a realtime display of the sensor readings.
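In the browser, angularFire handles the listening for you. For comparison, here is a minimal Python sketch of the same idea using the Firebase REST API’s streaming mode, which, as I understand it, sends server-sent events (an `event:` line such as `put` or `patch`, a JSON `data:` line, then a blank line) when the request asks for `text/event-stream`. This is a swapped-in technique rather than anything in my repo; the `/readings` path and the helper names are hypothetical.

```python
import json
import urllib.request
from typing import Any, Iterable, Iterator, List, Optional, Tuple


def parse_firebase_stream(lines: Iterable[str]) -> Iterator[Tuple[str, Any]]:
    """Turn server-sent-event lines into (event_name, decoded_data) pairs."""
    event: Optional[str] = None
    data_lines: List[str] = []
    for raw in lines:
        line = raw.rstrip("\r\n")
        if not line:  # a blank line ends the current event
            if event is not None:
                data = json.loads("\n".join(data_lines)) if data_lines else None
                yield event, data
            event, data_lines = None, []
        elif line.startswith("event:"):
            event = line[len("event:"):].strip()
        elif line.startswith("data:"):
            data_lines.append(line[len("data:"):].strip())


def stream_readings(db_url: str) -> Iterator[Tuple[str, Any]]:
    """Open a streaming GET on /readings and yield events as they arrive."""
    request = urllib.request.Request(f"{db_url.rstrip('/')}/readings.json",
                                     headers={"Accept": "text/event-stream"})
    with urllib.request.urlopen(request) as response:
        yield from parse_firebase_stream(line.decode("utf-8") for line in response)
```

Looping over `stream_readings(url)` would then receive a `put` event (with `path` and `data`) each time the logging script adds a reading, plus periodic `keep-alive` events whose data is `null`.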

So the next step is to build that interface…

AngularJS app to show readings in near realtime

In the GitHub repo, you’ll find the code for an AngularJS app which shows the readings for the sensors in my network. It has to be said that the app was not written to be generic, and if you decide to fork the repo to build your own, I suspect you’ll have to do a fair bit of ‘hacking’ to get it to work.

The app was an opportunity for me to play with the following tools, and what you see here was built in a weekend, which goes to show how useful these tools are.

- Yeoman – for generating the initial AngularJS and Firebase app skeleton.

- Grunt – for automating parts of the build and preview.

- Bower – for managing JavaScript dependencies.

- AngularJS – for realtime binding of variables in the HTML to variables fed directly from Firebase data.

- angularFire – for abstracting away the code needed to talk to the Firebase database.

- Bootstrap 3 – for responsive presentational elements, so the app works on mobile and desktop.

I don’t pretend that this code is pretty – and there are no proper tests, but it works and it was fun to build!

Apologies

Finally, apologies to all those whom I have bored by recounting the current temperature in the greenhouse!